How do we actually integrate AI into our workflows and ensure the team embraces it?

If you’ve run tech demos, hosted lunch-and-learns, and evangelized AI to your team but still haven’t seen meaningful adoption, you’re not alone. This is one of the most common challenges I hear from leaders.

Often, the problem isn’t tools or training (although those matter, too). It’s culture.

People are nervous, and that’s understandable.

If your team is hesitant about AI, consider the bigger context: People are seeing headlines about job losses and layoffs. They’re watching companies announce record profits and workforce reductions in the same breath. Understandably, some are worried that embracing AI means training their own replacement.

Others may quietly be experimenting with AI already, but feel like they can’t talk about it. They don’t want to appear as if they’re “cheating” or automating too much of their role.

The result? You get hidden adoption, surface-level engagement, and stalled momentum.

If you want transparency from your team, you have to offer it first.

You don’t need all the answers. But you do need to start the conversation. Be clear about what AI means for your team.

A few prompts to clarify your thinking and guide your messaging:

- AI vision: What role will AI play in our organization? Are we using AI to scale the business or shrink it? Are we being honest (with ourselves and our team) about what that means for career paths and retention?

- AI value: What value is AI creating in our organization, and how might we redistribute that value (e.g., compensation, work/life balance)?

- Org chart: How might AI impact our org chart over the next year or two? How will existing roles evolve?

- Employee training & support: Have we considered future roles, responsibilities, and skills? Are we supporting our employees' path toward them?

- Modeling: Are company leaders using AI in their work—and talking about it openly? Are they sharing both successes and failures? Are we rewarding AI experimentation... or quietly discouraging it?

A culture of experimentation, honesty, and trust starts at the top, and may do far more for your AI adoption than any prompt engineering workshop.

We have a lot of ideas for using AI, but we’re struggling to get started. How do we prioritize them?

Possibility overload is real. One of the biggest blockers I see in companies right now is decision paralysis.

Teams are stuck debating which use cases to pursue. Meanwhile, the clock is ticking, and AI disruption isn’t waiting for everyone to get on the same page.

So here’s how I recommend reframing the challenge:

✅ Start with the cost of inaction.

Ask: What’s most at risk of disruption right now?

By mapping the risks (and opportunities) of AI across your services and operations, you can prioritize what matters most, now—and stop spinning your wheels.

How this might look: The management team sets a recurring meeting schedule. Each meeting focuses on one area of the business. The team rates that area’s near-term disruption potential (low, medium, or high) and decides next steps.

Focus areas might include service offerings, operations and talent alignment, service mix and growth bets, differentiation and positioning, service packages and pricing, and talent pipeline and recruiting.
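If it helps to keep score between meetings, here is a minimal Python sketch of that review log. It assumes you record one low/medium/high rating per focus area and want the highest-risk areas on the agenda first. The `FocusArea` and `meeting_order` names are hypothetical, and the ratings shown are placeholders, not recommendations.

```python
from dataclasses import dataclass

# Rating scale from the text: low, medium, or high near-term disruption potential.
RATING_ORDER = {"high": 0, "medium": 1, "low": 2}


@dataclass
class FocusArea:
    name: str
    disruption: str  # "low", "medium", or "high"
    next_step: str = ""  # filled in during the meeting


def meeting_order(areas):
    """Return focus areas sorted so the highest-risk ones are discussed first."""
    for area in areas:
        if area.disruption not in RATING_ORDER:
            raise ValueError(f"Unknown rating for {area.name}: {area.disruption}")
    # sorted() is stable, so areas with equal ratings keep their original order.
    return sorted(areas, key=lambda a: RATING_ORDER[a.disruption])


# Placeholder ratings for illustration only.
areas = [
    FocusArea("Service offerings", "medium"),
    FocusArea("Service packages and pricing", "high"),
    FocusArea("Talent pipeline and recruiting", "low"),
    FocusArea("Differentiation and positioning", "high"),
]

for area in meeting_order(areas):
    print(f"{area.disruption:<6} {area.name}")
```

A shared spreadsheet works just as well. The point is simply to sort by risk before you schedule.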

💡 Pro tip: Bring in a third party to help. They can provide:

- Objectivity and focus: A clear outside perspective to help your team prioritize what matters most and avoid optimizing the wrong things.

- External insight: Market and AI trend analysis to anchor decisions in real data—not just internal opinions.

- Facilitation and alignment: Structured conversations that get leadership unstuck and create buy‑in across teams.

- Momentum: Translating insights into clear next steps, so the work doesn’t stall in endless debate.

The goal is to stop spinning your wheels, and start moving forward with clarity and confidence.

How should we approach AI? Should we optimize current processes now—or wait until we have a full AI strategy?

You don’t have to choose. You can (and should) take a two-pronged approach. For example:

1️⃣ Big-picture strategy

This is your leadership track. It’s focused on:

- Defining your vision and positioning

- Assessing service-level disruption

- Evaluating pricing, packaging, ops, and org design

It moves at a slower, more thoughtful pace—but sets the long-term direction.

2️⃣ Operational enablement

This is your team track. It’s focused on:

- Quick wins and workflow improvements

- Upskilling and experimentation

- Creating space, capacity, and confidence

It builds momentum while the strategy work unfolds.

For best results, tackle each in separate meetings with relevant stakeholders so your discussions stay focused and on track.

What’s a good tool for ______?

I get asked about tools a lot (and I’ll share my newest recommendations in the next issue).

But as the number of AI startups explodes, here’s a simple 4-part checklist for vetting AI tools:

✅ 1. Legal & IP Review

- Commercial use: Does the tool grant rights to use the output commercially?

- Ownership: Do you retain IP rights, or does the tool claim any license over your outputs?

- Privacy & security: Is your input data (e.g., files, images, notes, prompts) kept private?

✅ 2. Practical & Technical Fit

- Pricing model: Are you paying per use? Per seat? For outputs? Watch for hidden fees.

- File compatibility: Can it export in formats your team actually uses?

- Branding: Does it watermark outputs or restrict resolution?

✅ 3. Quality Testing

- Accuracy & fidelity: How accurate and high-quality are the responses? Do images or videos look professional and on-brand?

- Alignment: Can it replicate your firm’s style, not just generate generic outputs?

- Speed: Does it actually save time—or just shift the work elsewhere?

✅ 4. Company Viability

- Funding and investors: Who’s backing this tool? Are they likely to be around in 12 months?

- Reviews: What do ratings and reviews say? Do they have the social proof to back up their claims?
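If you vet tools often, the checklist above can be kept as a small reusable scorecard. The Python sketch below is one possible shape, not a standard. The check names are shortened from the list above, `open_items` is a hypothetical helper, and the sample answers are invented for illustration.

```python
# The four review areas and their checks, shortened from the checklist above.
CHECKLIST = {
    "Legal & IP Review": ["commercial use", "ownership", "privacy & security"],
    "Practical & Technical Fit": ["pricing model", "file compatibility", "branding"],
    "Quality Testing": ["accuracy & fidelity", "alignment", "speed"],
    "Company Viability": ["funding and investors", "reviews"],
}


def open_items(answers):
    """List every check that failed (False) or has not been reviewed yet (missing).

    `answers` maps a check name to True (acceptable) or False (a concern).
    """
    gaps = {}
    for area, checks in CHECKLIST.items():
        unresolved = [c for c in checks if answers.get(c) is not True]
        if unresolved:
            gaps[area] = unresolved
    return gaps


# Hypothetical review of an unnamed tool.
answers = {
    "commercial use": True,
    "ownership": True,
    "privacy & security": False,  # a concern was found during review
    "pricing model": True,
    "file compatibility": True,
    "branding": True,
    "accuracy & fidelity": True,
    "alignment": True,
    "speed": True,
    "funding and investors": True,
    # "reviews" has not been checked yet, so it is reported as open.
}

for area, checks in open_items(answers).items():
    print(f"{area}: {', '.join(checks)}")
```

Treating an unanswered check the same as a failed one is a deliberate choice here: a tool isn’t cleared until every box has actually been looked at.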

How do I figure out a better system for using AI — one that actually fits how I work?

If AI feels more confusing the deeper you go... welcome to the club.

There are tools for everything. And 47 different ways to use them. So how do you build a system that actually works for you?

Start by asking four key questions:

1️⃣ Where does the information live?

For example:

- Google Workspace (Docs, Sheets, Slides, Gmail, Drive): Gemini for Google Workspace is usually the best fit since it works natively on those files.

- Dropbox: Start with Dropbox AI features if the info is already stored there (e.g., summarizing a folder or searching files).

- Other formats (Word, PDFs, images, etc.): Consider dropping them into ChatGPT/NotebookLM for analysis.

👉 Ask: “Do I want to analyze the file where it already is, or is it worth moving it into another tool?”

2️⃣ How dynamic is the content?

- Static or reference material (e.g., company SOPs, research reports): NotebookLM works well since it builds a persistent “notebook” you can query repeatedly.

- Dynamic, fast-changing content (e.g., live sales data, financial tracking): In-app AI tools, such as those in HubSpot and Salesforce or Gemini for Google Workspace, keep things in sync without re-uploading.

- One-off text dump: ChatGPT or Gemini is quick and easy.

👉 Ask: “Do I need this to stay up-to-date or just get quick answers?”

3️⃣ What are you trying to do?

- Brainstorm or write? → ChatGPT or a custom GPT.

- Analyze numbers? → Gemini in Sheets.

- Synthesize research? → NotebookLM or Deep Research.

👉 AI tools have different features and advantages. Ask: “What is my goal? What tool or model will help me accomplish that?”

4️⃣ How important is ease vs. power?

- Ease: Use AI where you already work (Gemini in Workspace, Dropbox AI).

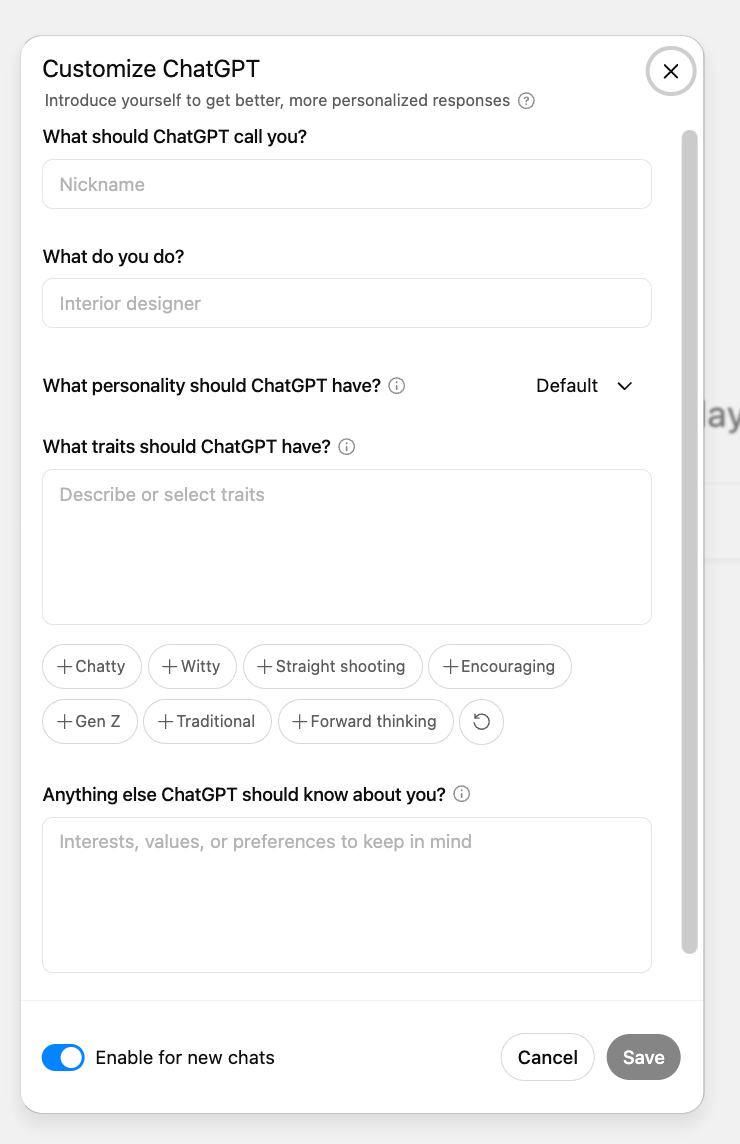

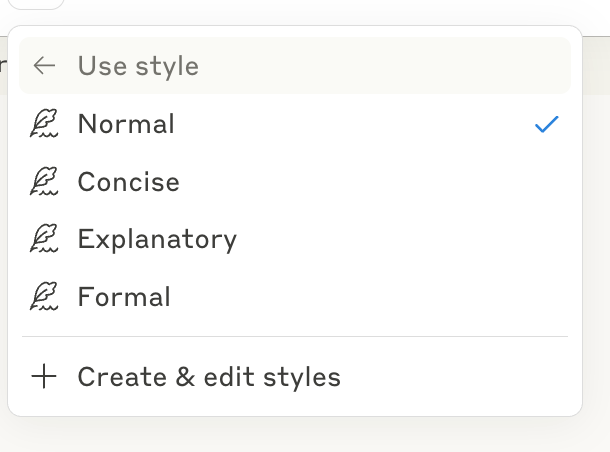

- Power / flexibility (custom instructions, advanced prompting, multiple formats): Use a frontier model (Gemini, ChatGPT), a custom GPT/Gem, or a specialized tool.

👉 Ask: “Do I want the easiest path—or the most capable tool?”
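For anyone who prefers a cheat sheet, the four questions can be written down as a simple lookup. This Python sketch only restates the suggestions above. The answer labels (such as `"google_workspace"` or `"one_off"`) and the `suggest_tools` function are hypothetical names I made up for illustration, and the sketch deliberately returns one suggestion per question rather than picking a single winner.

```python
# Suggestions per question, taken from the lists above.
SUGGESTIONS = {
    "location": {
        "google_workspace": "Gemini for Google Workspace",
        "dropbox": "Dropbox AI",
        "other": "ChatGPT or NotebookLM",
    },
    "dynamics": {
        "static": "NotebookLM",
        "dynamic": "In-app AI (HubSpot, Salesforce, Gemini for Google Workspace)",
        "one_off": "ChatGPT or Gemini",
    },
    "goal": {
        "write": "ChatGPT or a custom GPT",
        "numbers": "Gemini in Sheets",
        "research": "NotebookLM or Deep Research",
    },
    "preference": {
        "ease": "AI where you already work (Gemini in Workspace, Dropbox AI)",
        "power": "A frontier model, a custom GPT/Gem, or a specialized tool",
    },
}


def suggest_tools(**answers):
    """Return one suggestion per answered question, keyed by question name."""
    result = {}
    for question, answer in answers.items():
        options = SUGGESTIONS.get(question)
        if options is None or answer not in options:
            raise ValueError(f"No suggestion for {question}={answer!r}")
        result[question] = options[answer]
    return result


# Example: research reports stored as PDFs that I want to query repeatedly.
picks = suggest_tools(location="other", dynamics="static", goal="research")
for question, tool in picks.items():
    print(f"{question}: {tool}")
```

When the answers point to the same tool more than once (NotebookLM in this example), that’s usually a good sign you’ve found your starting point.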